StorONE Blog

Check Out Our Latest Tips on Using Storage Technology Most Effectively

ONEai: When AI Meets Enterprise Storage – Finally, the Right Way

By Gal Naor, CEO of StorONE

Enterprises looking to embrace AI often run into a harsh reality: astronomical costs, technical complexity, and a loss of control over sensitive

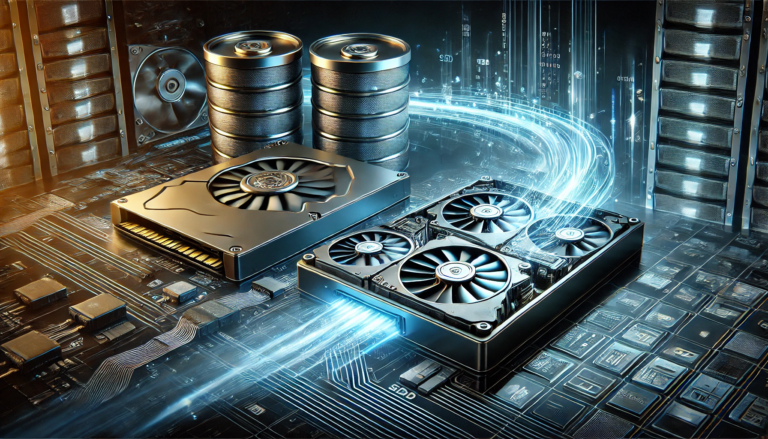

Hybrid Storage – A Balance

February 20, 2025

By James Keating, StorONE Solution Architect

Hybrid storage is a concept that has been around since the beginning of storage. The concept is simple:

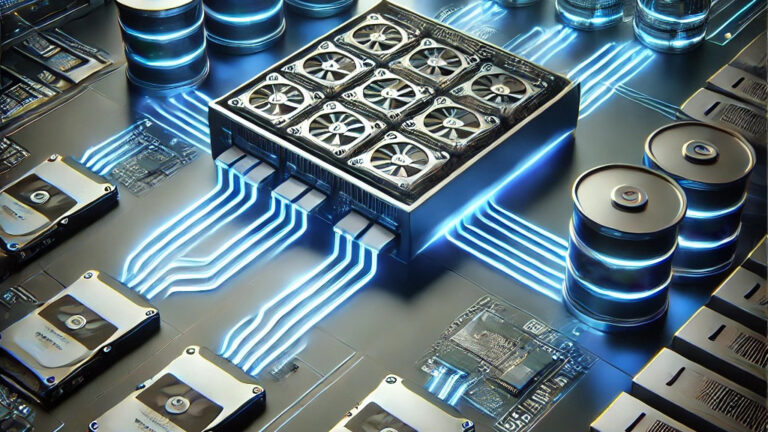

How to Drive Out Cost on Your Storage

February 13, 2025

In an era where data volume is skyrocketing, businesses face the challenge of managing vast amounts of information while keeping costs under control. The key

The Power of High-Performance, High-Capacity Tiered Storage for Healthcare PACS

February 6, 2025

By Chris Mellon, StorONE Solution Architect

In the healthcare industry, the ability to quickly access and share medical images is critical for providing timely, high-quality